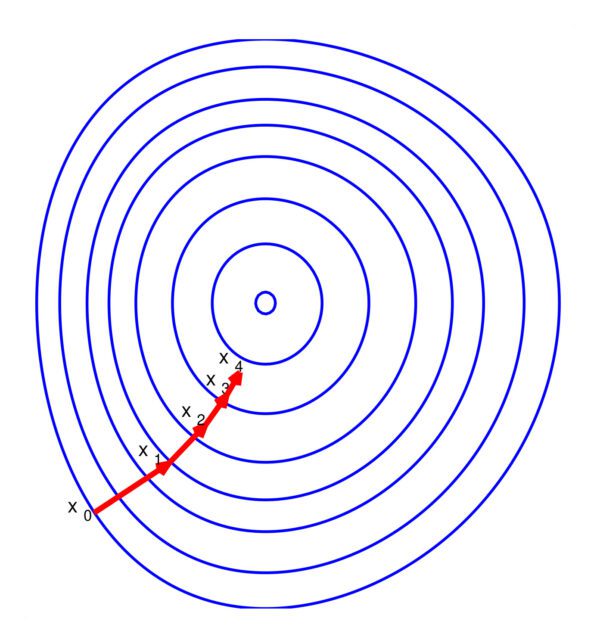

A common question in data science, machine learning, and mathematical optimization interviews concerns a property of the method of steepest descent, also called the gradient descent method: when the step size is chosen by exact line search, successive steepest descent directions are orthogonal (normal) to one another. Interviewers usually ask for a proof or an example that explains this property. In this article, I start with the proof and then give a simple example.

Let $x_0$ be the starting point of the iterative steepest descent method to minimize $f(x)$, whose extremum is $x^*$.

We update the iterative point as follows:

The next point is

$$x_{k+1} = x_k - \alpha \nabla f(x_k)$$

The new point is a function of $\alpha$, the step size.
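A minimal sketch of this update rule in Python follows. It assumes plain lists for vectors and a caller-supplied gradient function. The names `steepest_descent_step` and `grad_f` and the test function $f(x) = x_1^2 + 3x_2^2$ are hypothetical and chosen only for illustration:

```python
from typing import Callable, List

Vector = List[float]


def steepest_descent_step(
    grad: Callable[[Vector], Vector], x: Vector, alpha: float
) -> Vector:
    """Return the next point x_{k+1} = x_k - alpha * grad f(x_k)."""
    g = grad(x)
    return [xi - alpha * gi for xi, gi in zip(x, g)]


# Hypothetical test function f(x) = x1^2 + 3*x2^2, whose gradient is (2*x1, 6*x2).
def grad_f(x: Vector) -> Vector:
    return [2.0 * x[0], 6.0 * x[1]]


x0 = [1.0, 1.0]
x1 = steepest_descent_step(grad_f, x0, alpha=0.25)
print(x1)  # [0.5, -0.5]
```

With a fixed $\alpha$, the step is only vector arithmetic. Choosing $\alpha$ well is what produces the orthogonality property.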

If $\phi(\alpha) = f\big(x_k - \alpha \nabla f(x_k)\big)$ is the function of $\alpha$ that decides the new point, the optimal value of $\alpha$ (the one that minimizes $f$ along the search direction) can be calculated by setting

$$\frac{d\phi}{d\alpha} = 0$$

Note that the derivative is taken with respect to $\alpha$.

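By the chain rule,

$$\frac{d\phi}{d\alpha} = \nabla f\big(x_k - \alpha \nabla f(x_k)\big)^T \, \frac{d}{d\alpha}\big(x_k - \alpha \nabla f(x_k)\big)$$

Since $x_{k+1} = x_k - \alpha \nabla f(x_k)$,

$$\frac{d\phi}{d\alpha} = \nabla f(x_{k+1})^T \big(-\nabla f(x_k)\big)$$

$$\frac{d\phi}{d\alpha} = -\nabla f(x_{k+1})^T \nabla f(x_k) = 0$$

If we further simplify,

$$\nabla f(x_{k+1})^T \nabla f(x_k) = 0$$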
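The chain-rule step can be checked numerically. The sketch below compares a central finite difference of $\phi(\alpha)$ with $-\nabla f(x_{k+1})^T \nabla f(x_k)$. It makes three assumptions for illustration:

- The test function is the Rosenbrock function, chosen only because it is not quadratic.
- The point `x_k` is arbitrary.
- The step size `alpha` is arbitrary.

The identity holds for any $\alpha$; only the optimal $\alpha$ makes it zero.

```python
from typing import List

Vector = List[float]


def f(x: Vector) -> float:
    # Hypothetical non-quadratic test function (Rosenbrock).
    return (1.0 - x[0]) ** 2 + 100.0 * (x[1] - x[0] ** 2) ** 2


def grad_f(x: Vector) -> Vector:
    # Analytic gradient of the Rosenbrock function above.
    return [
        -2.0 * (1.0 - x[0]) - 400.0 * x[0] * (x[1] - x[0] ** 2),
        200.0 * (x[1] - x[0] ** 2),
    ]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


x_k = [-1.2, 1.0]
g_k = grad_f(x_k)


def point(alpha: float) -> Vector:
    # x_{k+1}(alpha) = x_k - alpha * grad f(x_k)
    return [xi - alpha * gi for xi, gi in zip(x_k, g_k)]


def phi(alpha: float) -> float:
    return f(point(alpha))


alpha = 1e-3  # arbitrary, deliberately not the optimal step
h = 1e-6
finite_diff = (phi(alpha + h) - phi(alpha - h)) / (2.0 * h)  # numerical dphi/dalpha
chain_rule = -dot(grad_f(point(alpha)), g_k)  # -grad f(x_{k+1})^T grad f(x_k)
print(finite_diff, chain_rule)  # the two values agree to several significant digits
```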

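Now we have, for the steepest descent directions $d_k = -\nabla f(x_k)$ and $d_{k+1} = -\nabla f(x_{k+1})$,

$$d_{k+1}^T d_k = \nabla f(x_{k+1})^T \nabla f(x_k)$$

$$d_{k+1}^T d_k = 0$$

The dot product of successive gradients is therefore zero. By the definition of orthogonality, two nonzero vectors are orthogonal when their dot product is zero. Successive gradients, and hence successive steepest descent directions, are therefore orthogonal to each other.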
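To see the property over several iterations, here is a sketch that assumes a quadratic objective $f(x) = \frac{1}{2}x^T A x - b^T x$ with a symmetric positive definite $A$. The matrix, vector, and starting point are hypothetical. For such a function, $\nabla f(x) = Ax - b$. Setting $\frac{d\phi}{d\alpha} = -g^T g + \alpha\, g^T A g = 0$, with $g = \nabla f(x_k)$, gives the exact step $\alpha = \frac{g^T g}{g^T A g}$.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, v: Vector) -> Vector:
    return [sum(a * vj for a, vj in zip(row, v)) for row in A]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def gradient(A: Matrix, b: Vector, x: Vector) -> Vector:
    # grad f(x) = A x - b for f(x) = 1/2 x^T A x - b^T x (A symmetric)
    return [ax - bi for ax, bi in zip(matvec(A, x), b)]


def steepest_descent_exact(A: Matrix, b: Vector, x: Vector, iters: int) -> List[Vector]:
    """Run steepest descent with exact line search; return gradients g_0, g_1, ..."""
    grads = [gradient(A, b, x)]
    for _ in range(iters):
        g = grads[-1]
        gg = dot(g, g)
        if gg == 0.0:  # already at the minimizer
            break
        alpha = gg / dot(g, matvec(A, g))  # solves dphi/dalpha = 0
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
        grads.append(gradient(A, b, x))
    return grads


A = [[4.0, 1.0], [1.0, 3.0]]  # hypothetical symmetric positive definite matrix
b = [1.0, 2.0]
grads = steepest_descent_exact(A, b, [2.0, 1.0], iters=5)
for k in range(len(grads) - 1):
    # dot product of successive gradients: zero up to rounding error
    print(k, dot(grads[k], grads[k + 1]))
```

The printed values are zero only up to floating-point rounding. With an inexact step size, such as a fixed $\alpha$, the dot products $g^T g - \alpha\, g^T A g$ are generally not zero. This is why the property is tied to exact line search.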

Note: $\nabla f(x_k)$ means the derivative (gradient) of $f$ at the point $x_k$.

Understanding it with a simple example

In this example, $f$ is a function of two variables.

The starting point $x_0$ is given.

Now, the gradient of $f$ at $x_0$ is

$$\nabla f(x_0) \qquad (1)$$

According to the steepest descent rule, the new update point is

$$x_1 = x_0 - \alpha \nabla f(x_0)$$

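Note that the new point $x_1$ is a function of $\alpha$, so the function value at the new point is also a function of $\alpha$:

$$\phi(\alpha) = f(x_1) = f\big(x_0 - \alpha \nabla f(x_0)\big)$$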

Now, the gradient of $f$ at the new point is

$$\nabla f(x_1) = \nabla f\big(x_0 - \alpha \nabla f(x_0)\big) \qquad (2)$$

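We set $\frac{d\phi}{d\alpha} = 0$ to obtain the optimal step size $\alpha^*$. The new point is then

$$x_1 = x_0 - \alpha^* \nabla f(x_0)$$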

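From (1) and (2), with $\alpha = \alpha^*$, we have the two successive gradients $\nabla f(x_0)$ and $\nabla f(x_1)$. Their dot product is

$$\nabla f(x_0)^T \nabla f(x_1) = 0$$

so the two successive gradients are orthogonal.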
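The steps of the example can be traced in code. The sketch below assumes a hypothetical quadratic function $f(u, v) = u - v + 2u^2 + 2uv + v^2$ with starting point $x_0 = (0, 0)$. This is an illustrative choice, not necessarily the function used above. It follows the same steps: gradient (1), the exact step size from $\frac{d\phi}{d\alpha} = 0$, the new point, gradient (2), and their dot product.

```python
from typing import List

Vector = List[float]


def f(x: Vector) -> float:
    # Hypothetical example function f(u, v) = u - v + 2u^2 + 2uv + v^2
    u, v = x
    return u - v + 2 * u**2 + 2 * u * v + v**2


def grad_f(x: Vector) -> Vector:
    u, v = x
    return [1 + 4 * u + 2 * v, -1 + 2 * u + 2 * v]


def dot(p: Vector, q: Vector) -> float:
    return sum(a * b for a, b in zip(p, q))


H = [[4.0, 2.0], [2.0, 2.0]]  # constant Hessian of f (f is quadratic)

x0 = [0.0, 0.0]
g0 = grad_f(x0)  # gradient (1)

# phi(alpha) = f(x0 - alpha * g0) is quadratic in alpha, so dphi/dalpha = 0
# gives alpha* = g0^T g0 / g0^T H g0.
Hg0 = [dot(row, g0) for row in H]
alpha_star = dot(g0, g0) / dot(g0, Hg0)

x1 = [xi - alpha_star * gi for xi, gi in zip(x0, g0)]
g1 = grad_f(x1)  # gradient (2)

print(g0, alpha_star, x1, f(x1))  # [1.0, -1.0] 1.0 [-1.0, 1.0] -1.0
print(g1, dot(g0, g1))  # [-1.0, -1.0] 0.0
```

For this assumed function, $\phi(\alpha) = \alpha^2 - 2\alpha$, so $\alpha^* = 1$ and $x_1 = (-1, 1)$. The two gradients $(1, -1)$ and $(-1, -1)$ have a dot product of zero.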
